When working with AI, I now stop before typing anything into the box to ask myself a question : what do I expect from the AI?

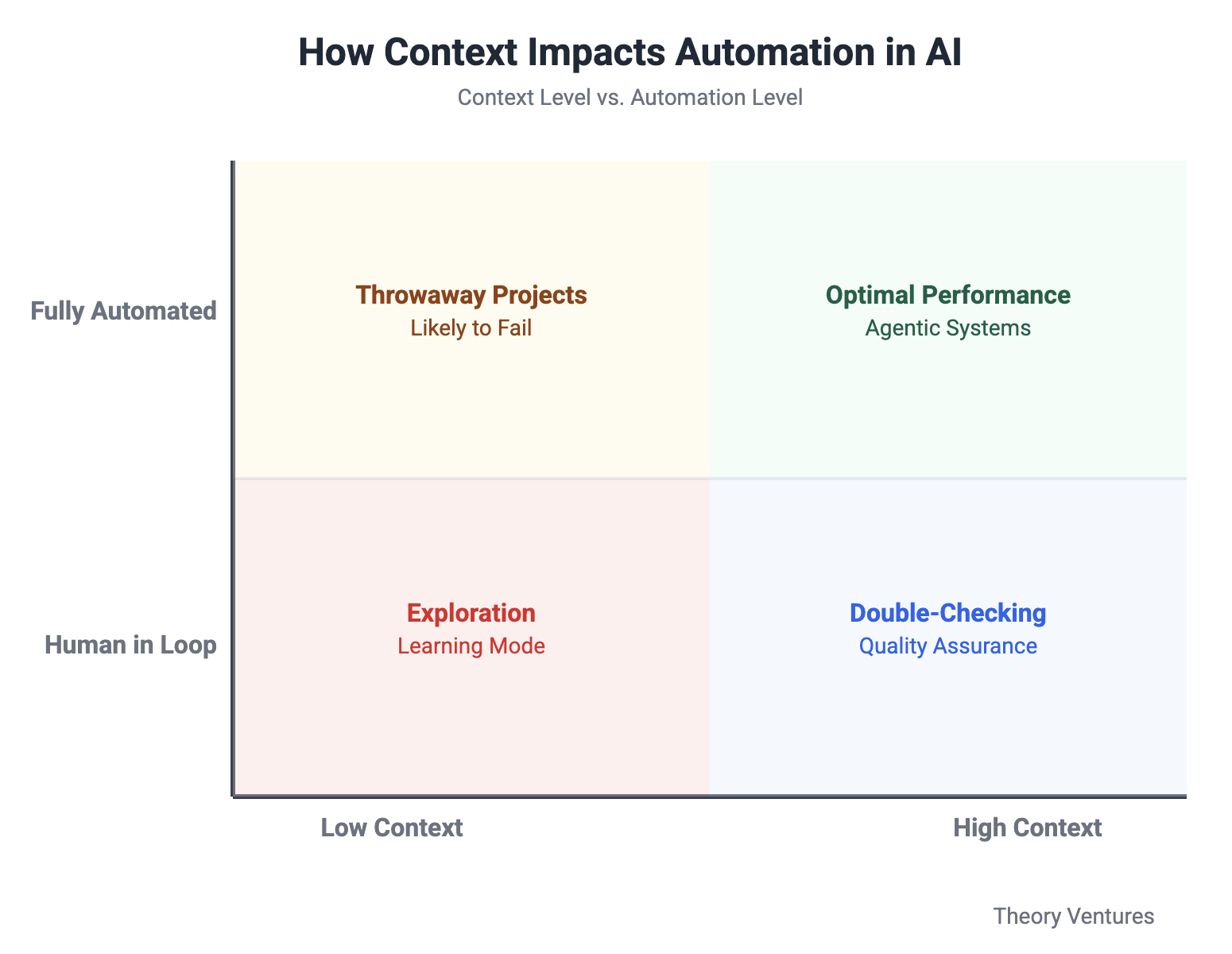

2x2 to the rescue! Which box am I in?

One axis is how much context I provide : from not very much to quite a bit. The other is whether I watch the AI as it works or let it run.

If I provide very little information & let the system run, for example ‘research Forward Deployed Engineer trends,’ I get throwaway results : broad overviews without relevant detail.

Running the same project with a series of short questions produces an iterative conversation that succeeds - an Exploration.

“Which companies have implemented Forward Deployed Engineers (FDEs)? What are the typical backgrounds of FDEs? Which types of contract structures & businesses lend themselves to this work?”

When I have a very low tolerance for mistakes, I provide extensive context & work iteratively with the AI. For blog posts or financial analysis, I share everything (current drafts, previous writings, detailed requirements) then proceed sentence by sentence.

Letting an agent run freely requires defining everything upfront. I rarely succeed here because the upfront work demands tremendous clarity : exact goals, comprehensive information, & detailed task lists with validation criteria. In other words, an outline.

These prompts end up looking like the product requirements documents I wrote as a product manager.
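To make that concrete, here is a minimal Python sketch of such a brief. It is only an illustration, not a format I actually use : the names `AgentBrief`, `Task`, `gaps` & `to_prompt` are hypothetical, & the example goal & tasks are invented. The idea is to list what is still missing before letting an agent run unattended.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    description: str
    validation: str  # how to tell the task was done correctly


@dataclass
class AgentBrief:
    """Hypothetical PRD-style brief: goal, context, tasks with validation."""
    goal: str
    context: list[str] = field(default_factory=list)  # drafts, notes, data
    tasks: list[Task] = field(default_factory=list)

    def gaps(self) -> list[str]:
        """Return what is still missing before the agent can run freely."""
        missing = []
        if not self.goal.strip():
            missing.append("goal")
        if not self.context:
            missing.append("context")
        if not self.tasks:
            missing.append("tasks")
        for i, task in enumerate(self.tasks, start=1):
            if not task.validation.strip():
                missing.append(f"validation for task {i}")
        return missing

    def to_prompt(self) -> str:
        """Render the brief as plain text to paste into the box."""
        lines = [f"Goal: {self.goal}", "", "Context:"]
        lines += [f"- {item}" for item in self.context]
        lines += ["", "Tasks:"]
        for i, task in enumerate(self.tasks, start=1):
            lines.append(f"{i}. {task.description} (done when: {task.validation})")
        return "\n".join(lines)


# Invented example: the second task has no validation criterion yet.
brief = AgentBrief(
    goal="Summarize Forward Deployed Engineer hiring trends",
    context=["notes from earlier exploration chats"],
    tasks=[
        Task("List companies that employ FDEs", "each company has a source"),
        Task("Describe typical FDE backgrounds", ""),
    ],
)
print(brief.gaps())  # ['validation for task 2']
```

If `gaps()` returns anything, the brief is not ready for an agent to run on its own, & I am better off working iteratively instead.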

Answering ‘what do I expect?’ will get easier as AI systems access more of my information & improve at selecting relevant data. And as I get better at articulating what I actually want, the collaboration improves.

I aim to move many more of my questions out of the top-left bucket, which is how I was trained with Google search, & into the other three quadrants.

I also expect this habit will help me work with people better.