Philip Schmid dropped an astounding figure[^1] yesterday about Google’s AI scale: 1,300 trillion tokens per month (1.3 quadrillion, the first time I’ve ever used that unit!).

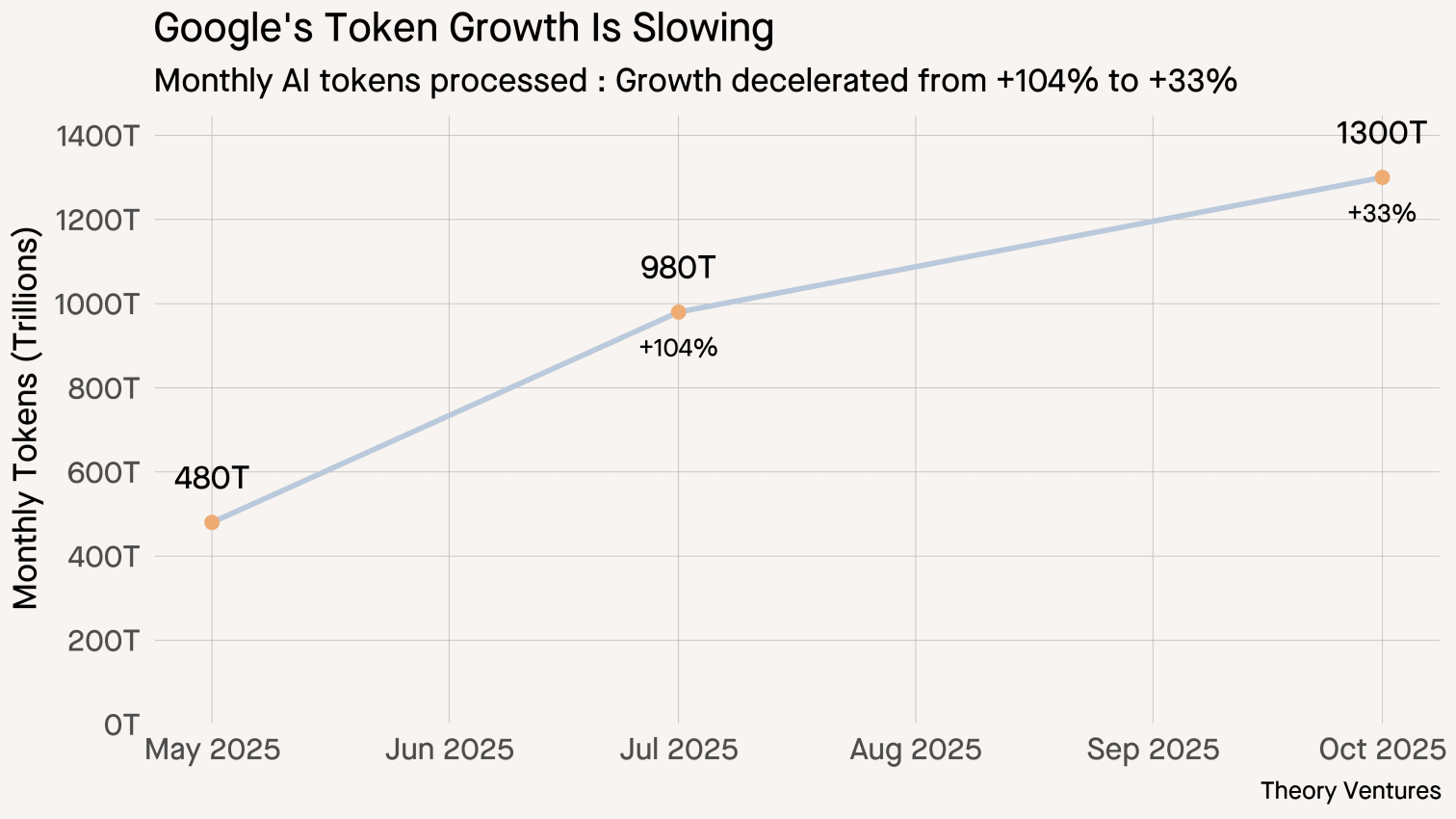

Now that we have three data points on Google’s token processing, we can chart the progress.

In May, Google announced at I/O[^2] that it was processing 480 trillion monthly tokens across its surfaces. Two months later, in July, it announced[^3] that the number had more than doubled to 980 trillion. Now it’s up to 1,300 trillion.

The absolute numbers are staggering. But could growth be decelerating?

Between May & July, Google’s monthly volume grew by about 250T tokens each month (500T over two months). In the more recent period, growth fell to about 107T tokens per month (320T over roughly three months).
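To make the slowdown concrete, here is a minimal sketch of the arithmetic, assuming the three data points above. The May-to-July gap of two months comes from the announcements; the three-month gap between July and the latest figure is an assumption inferred from the ~107T/month number. The helper `growth_between` and the `DataPoint` type are hypothetical names for illustration. Alongside the absolute monthly increase, the sketch also computes a compound monthly growth rate.

```python
from dataclasses import dataclass


@dataclass
class DataPoint:
    label: str
    monthly_tokens_t: float  # tokens processed per month, in trillions


def growth_between(a: DataPoint, b: DataPoint, months: int) -> tuple[float, float]:
    """Return (tokens added per month, compound monthly growth rate) from a to b."""
    added_per_month = (b.monthly_tokens_t - a.monthly_tokens_t) / months
    compound_rate = (b.monthly_tokens_t / a.monthly_tokens_t) ** (1 / months) - 1
    return added_per_month, compound_rate


may = DataPoint("May (I/O)", 480)
july = DataPoint("July", 980)
latest = DataPoint("Latest", 1300)

# May -> July is two months per the announcements; July -> latest is ASSUMED
# to be three months, the span implied by the ~107T/month figure.
periods = [(may, july, 2), (july, latest, 3)]

for a, b, months in periods:
    added, rate = growth_between(a, b, months)
    print(f"{a.label} -> {b.label}: +{added:.0f}T tokens/month, "
          f"{rate:.1%} compound monthly growth")
```

Under these assumptions, the compound monthly growth rate drops from roughly 43% to roughly 10%, so the deceleration shows up in relative terms as well as absolute ones.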

This raises more questions than it answers. What could be driving the slowdown? Some hypotheses:

- Google may be rate-limiting AI for free users because of unit economics.
- Google may be limited by data center availability. There may not be enough GPUs to sustain these growth rates. On earnings calls this year, the company has said it expects to be capacity constrained through Q4 2025.
- Google’s figure combines internal & external AI token processing, and the mix between the two may have shifted.
- Google may be driving significant efficiencies through algorithmic improvements, better caching, or other advances that reduce the total number of tokens processed.

I wasn’t able to find any other comparable time series from neoclouds or hyperscalers to draw broader conclusions. These data points from Google are among the few we can track.

Data center investment is scaling towards $400 billion this year.[^4] Meanwhile, incumbents are striking strategic deals in the tens of billions, raising questions about circular financing & demand sustainability.

This is one of the metrics to track!