The Scaling Wall Was A Mirage

Two revelations this week have shaken the narrative in AI: Nvidia’s earnings & this tweet about Gemini.

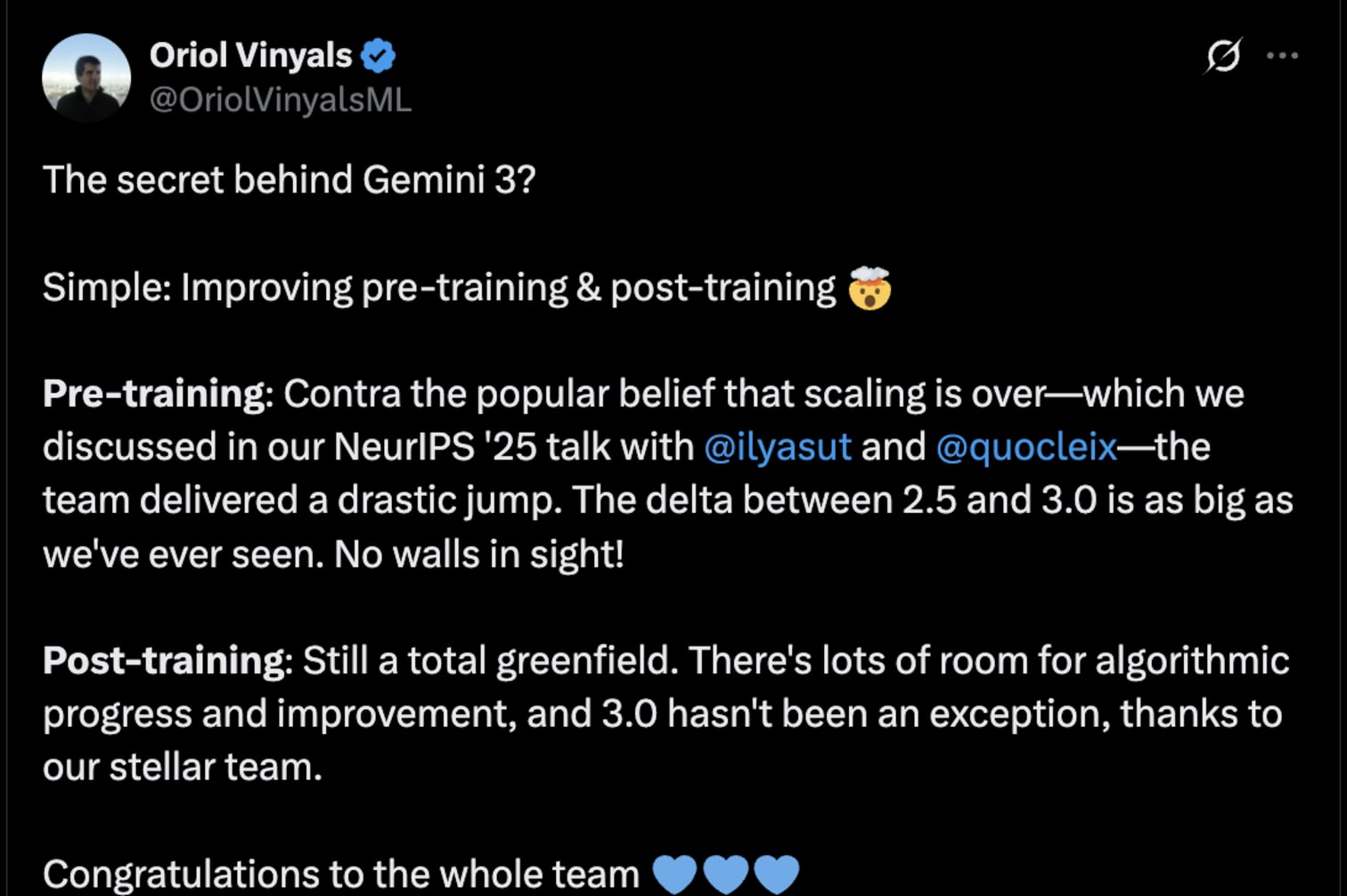

The AI industry spent 2025 convinced that pre-training scaling laws had hit a wall. Models were no longer improving simply from adding more compute during training.

Then Gemini 3 launched. The model has the same parameter count as Gemini 2.5, one trillion parameters, yet achieved massive performance improvements. It’s the first model to break 1500 Elo on LMArena & beat GPT-5.1 on 19 of 20 benchmarks.